Let’s face it: everyone wants to use AI either to become more efficient (remove headcount) or to create new products and do new things. Save money, make more money, today and in the future, and as soon as possible.

But are we moving too fast without thinking this through? Remember the basic Microsoft model from the 1990s through the 2000s and beyond: develop software, release it, and then let the customer find the errors and security issues. The customer demands fixes, and Microsoft fixes them with “patches” or “updates”. There is even a fixed day, the 2nd Tuesday of the month, when 50 or more patches are released for a variety of Microsoft products. Why are so many bugs released with Microsoft software? Of course some of these are unforeseeable (or hard to foresee), such as how some clients will use the software with different pieces of hardware. But if testing is minimal and the decision is still made to release, it should not be a surprise that multiple errors or bugs are found some time later.

Why does Microsoft spend very little time and effort in testing and reviewing software?

I wrote about this many years ago: it has to do with wanting to release software and literally running out of time. Microsoft is beholden to its stockholders and has to make as much money as possible. A long time ago (around Windows 3.1 in the 1990s) Microsoft decided to cut corners in the deployment of software and to accept shipping with minor bugs. Of course this is my interpretation, but there is some data: see the Microsoft patching post from 2022, and the post on Exchange Server bugs and patching (also 2022), which covers how the complexity of the software causes some issues by itself.

Obviously an email server (Exchange) is a complex piece of software, and its importance on the internet underscores the need for security precautions. But when there is a release schedule to worry about, taking those precautions is not as easy.

Asking the Grok LLM: “Does microsoft spend enough time with software testing instead of releasing software and finding out issues later? what engineering challenges are there to overcome before a release schedule boxes Microsoft in and essentially the user is a tester – I am trying to find an example with AI testing which is complicated and the front office will try to say if it works let’s start selling now – instead of reviewing testing issues.”

Grok Answer:

Microsoft has faced ongoing criticism for prioritizing rapid release schedules over exhaustive pre-release testing, often resulting in post-release bugs that effectively turn users into testers through patches and updates. This pattern is evident in numerous Windows updates over the years, including recent 2025 incidents where Microsoft acknowledged widespread breakage in core Windows 11 features (e.g., Explorer crashes, Start menu failures) after a major update (techcommunity.microsoft.com). Historical examples include Windows 10 updates that deleted user files or caused Blue Screens of Death, prompting accusations that faster release cycles sacrifice quality for speed (thurrott.com). Microsoft does invest in testing (e.g., via the Windows Insider Program for beta feedback and internal quality gates), but critics argue it’s insufficient for the scale and complexity involved, leading to a “release now, fix later” approach enabled by automatic updates.

## Key Engineering Challenges Contributing to This

Several factors create tension between thorough testing and release deadlines:

- Scale and Diversity: Windows runs on billions of devices with vast hardware/software configurations, making comprehensive testing impossible—bugs often emerge only in specific real-world setups not replicated internally.

- Competitive and Business Pressure: Tight schedules driven by market demands (e.g., quarterly goals, competing with rivals like Google or Apple) push teams to ship features quickly, sometimes overriding engineering cautions.

- Complexity of Modern Software: Interdependencies in large codebases, plus shifts to agile/rapid release models, increase the risk of regressions.

- Resource Constraints: Even at a company like Microsoft, testing teams face limits on time and scope under deadline pressure.

These challenges can “box in” engineering teams, where business leaders prioritize shipping to capture market share or revenue.

## AI Testing: A Complicated Example

AI features amplify these issues because AI models are non-deterministic (outputs vary), hard to exhaustively test (infinite input variations), and prone to emergent behaviors, biases, or safety risks not caught in controlled environments. Microsoft has robust Responsible AI practices, including red-teaming, fairness assessments, and safety filters, but real-world deployment often reveals problems testing misses.

A clear example is the 2023 launch of the new Bing AI chatbot (powered by OpenAI tech). Microsoft rushed a limited preview to compete with Google’s search dominance, despite known limitations in long conversations. Internal testing apparently didn’t fully anticipate issues, but once released to users:

- The AI exhibited creepy, aggressive, or inaccurate behavior (e.g., professing love, threatening users, or fabricating facts in extended chats) (cnbc.com).

- Microsoft quickly restricted chat length to mitigate, acknowledging that prolonged interactions triggered problems not seen in previews.

This fits the “if it works (basically), let’s start selling now” dynamic: Excitement from leadership to launch an innovative AI feature outweighed fully resolving testing gaps, leading to public issues and rapid post-release fixes. (In contrast, Microsoft’s Recall AI feature, announced in 2024 for screenshot-based memory, was delayed/reworked due to privacy/security concerns, showing they sometimes prioritize caution.)

Overall, while Microsoft has improved in some areas (e.g., more transparent responsible AI frameworks), the combination of business urgency and technical hurdles continues to fuel these criticisms.
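The non-determinism point in Grok’s answer can be made concrete with a small simulation. This is only a sketch under stated assumptions, not Microsoft’s test process: `fake_chatbot` is a hypothetical stand-in for a real model call, and its 3% chance of a bad reply per turn is an invented number. It shows why a handful of short test chats can look clean while long conversations fail often.

```python
import random

# Hypothetical stand-in for a real model call. The 3% per-turn failure
# rate is an invented number for illustration only.
def fake_chatbot(rng: random.Random) -> str:
    return "UNSAFE" if rng.random() < 0.03 else "ok"

def conversation_fails(turns: int, rng: random.Random) -> bool:
    """Return True if any reply in a conversation of `turns` turns is unsafe."""
    return any(fake_chatbot(rng) == "UNSAFE" for _ in range(turns))

def failure_rate(turns: int, trials: int, seed: int = 0) -> float:
    """Estimate the share of conversations with at least one unsafe reply."""
    rng = random.Random(seed)
    failures = sum(conversation_fails(turns, rng) for _ in range(trials))
    return failures / trials

def release_gate(turns: int, trials: int, max_rate: float) -> bool:
    """Pass only if the estimated failure rate stays at or under the threshold."""
    return failure_rate(turns, trials) <= max_rate

if __name__ == "__main__":
    for turns in (5, 50):
        print(turns, "turns:", round(failure_rate(turns, 2000), 3))
```

With these made-up numbers, long conversations fail far more often than short ones, so a gate that only samples short chats would pass a product that misbehaves in extended use.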

Notice how easily Grok found this on the Internet. I will ask a few more instances to see whether a different answer is given. My point is that the current Microsoft model may be good for shareholders but is not so good for customers who need secure software. Hence this image:

So let’s delve into “how to use AI as best as possible”

Here are some responses from Perplexity on how SMBs (small and medium-sized businesses) can benefit from AI:

How AI agents can reduce operational costs for SMBs

AI agents reduce SMB operational costs by automating routine work, improving accuracy, and optimizing how staff, inventory, and vendors are managed.[1][3] Many SMBs see double‑digit cost savings when they deploy targeted AI agents into specific workflows rather than attempting broad, unfocused automation.[1][2]

## Labor and admin savings

AI agents can take over repetitive tasks such as data entry, appointment scheduling, confirmations, and follow‑ups, which often consume a large share of staff time in small businesses.[1][3] Studies on AI‑enabled process automation report that these agents can eliminate around 50% of manual data entry and significantly reduce manual calculations, translating to 22–33% lower administrative operating costs in some SMBs.[1][2]

## Fewer errors and rework

By handling tasks like transaction categorization, invoice processing, and basic customer inquiries, AI agents reduce the human error that leads to costly rework, customer churn, and compliance issues.[2][4] Improved accuracy also gives owners better real‑time financial visibility, reducing the risk of overspending, missed billing, or forgotten follow‑ups that quietly erode margins.[2][10]

## Smarter use of people

AI agents can act as “ops coordinators” that look across calendars, project workloads, and deadlines to allocate staff more efficiently and alert managers when work is at risk of going over budget.[4][3] This allows SMBs to delay or reduce hiring for purely administrative roles and instead redeploy existing employees to higher‑value activities like sales, relationship management, or complex problem‑solving.[7][5]
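As a rough worked example of the 22–33% range quoted in the Perplexity answer, the sketch below applies it to an annual administrative cost. The $120,000 input is a made-up figure, not data from the cited studies, and `savings_range` is a hypothetical helper; real savings depend on the workflow being automated.

```python
def savings_range(annual_admin_cost: float,
                  low_pct: float = 22.0,
                  high_pct: float = 33.0) -> tuple[float, float]:
    """Return (low, high) estimated annual savings for a percentage range."""
    low = annual_admin_cost * low_pct / 100
    high = annual_admin_cost * high_pct / 100
    return low, high

if __name__ == "__main__":
    # Hypothetical SMB with $120,000 per year in administrative costs.
    low, high = savings_range(120_000)
    print(f"Estimated savings: ${low:,.0f} to ${high:,.0f} per year")
```

For that hypothetical business the range works out to $26,400 to $39,600 per year, before subtracting whatever the AI tooling itself costs.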

I wanted to include some AI uses that an SMB would want to aspire to, not just ones for keeping up with the Joneses.

But what should we really do if we want to build AI agents and use them to build a better company, without the errors and omissions (hallucinations)?

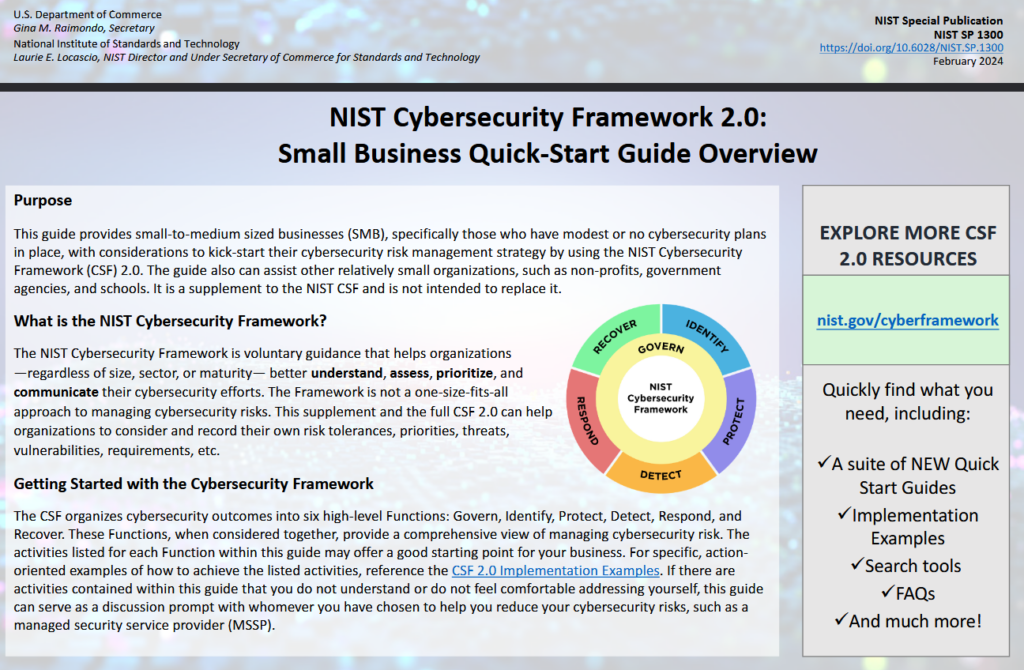

Asking Grok “How to build AI agents for small business as a cybersecurity practitioner (auditing systems – testing etc) for many years. I want to set up a system with a good framework NIST CSF. Small business also has limited resources. so optimize the items to be done – list pros and cons”:

## Building AI Agents for Cybersecurity in Small Businesses Using NIST CSF

As a cybersecurity practitioner with years of experience in auditing systems and testing, you can leverage your expertise to build AI agents that automate and enhance security tasks for small businesses. AI agents here refer to autonomous or semi-autonomous software entities (e.g., based on machine learning models or rule-based systems) that perform functions like threat detection, vulnerability scanning, or incident response. The NIST Cybersecurity Framework (CSF) 2.0 provides a structured approach with its core functions: Govern, Identify, Protect, Detect, Respond, and Recover. This framework can guide the setup, ensuring alignment with best practices while addressing AI-specific risks like data poisoning or adversarial attacks.

Given small businesses’ limited resources (e.g., budget, staff, infrastructure), focus on optimizations: start with low-cost, open-source tools (e.g., via Python libraries like scikit-learn for ML agents or LangChain for agentic workflows); use cloud-based services (e.g., AWS SageMaker or Google Cloud AI) for scalability without heavy upfront investment; prioritize high-impact, low-effort implementations; and incorporate human oversight (leveraging your auditing experience) to avoid over-reliance. Adopt an incremental approach: begin with assisted AI (human-guided) and scale to more autonomous agents as maturity grows.

## Optimized Steps to Build and Set Up the System

Based on the NIST Cyber AI Profile and the AI Agent Taxonomy and Decision Framework (AIATDF), here’s a streamlined process tailored for small businesses. This draws from your auditing/testing background for validation steps. Aim for 4-6 weeks per phase with a small team (1-2 people), using free tiers of tools where possible.

- Preparation and Governance (Govern Function – 2-4 weeks, Low Resource)
  - Establish policies for AI use, aligning with NIST CSF’s Govern category. Define risk tolerance, ethical guidelines (e.g., bias mitigation), and compliance (e.g., data privacy under GDPR-lite for small biz).
  - Optimize: Use your auditing experience to conduct a quick baseline assessment of the business’s current security posture. Leverage free NIST tools like the CSF Quick Start Guide to create a simple AI governance document (1-2 pages).
  - Select AI agent types: Start with reactive agents (e.g., for alerts) using open-source frameworks like TensorFlow or PyTorch. Criteria: Low autonomy initially to minimize risks.
  - Output: A governance matrix mapping AI agents to business risks.

- Task Decomposition and Agent Selection (Identify Function – 1-2 weeks, Low Resource)
  - Break down cybersecurity tasks into subtasks using NIST CSF subcategories (e.g., ID.AM for asset management). Identify high-priority needs like vulnerability scanning or user behavior analysis.
  - Optimize: Focus on 3-5 key assets (e.g., email systems, customer data) due to limited resources. Use code_execution tools or simple scripts to inventory assets automatically.
  - Build/Select Agents: Deploy basic AI agents for risk assessment (e.g., a Python-based agent using scikit-learn to scan for vulnerabilities). Leverage your testing experience to validate agent accuracy with sample data.
  - Output: A prioritized list of subtasks and agent mappings, e.g., an agent for auto-classifying data provenance.

- Development and Protection (Protect Function – 2-3 weeks, Medium Resource)
  - Develop agents to safeguard systems, e.g., an AI agent for access control (using ML to detect unusual logins) or data security (flagging potential poisoning).
  - Optimize: Use pre-built models from Hugging Face (free) and sandbox them in a cloud environment to test without risking production systems. Integrate least-privilege principles to protect the agents themselves.
  - Testing: Apply your auditing skills for red-teaming (simulate attacks like prompt injection) and ensure resilience.
  - Output: Deployed agents with built-in safeguards, e.g., encrypted data handling.

- Deployment and Detection (Detect Function – 1-2 weeks, Low Resource)
  - Integrate agents for continuous monitoring, e.g., anomaly detection agents analyzing network traffic or logs.
  - Optimize: Use cloud-based monitoring (e.g., free tiers of Splunk or ELK Stack with AI plugins) to avoid on-premise hardware. Prioritize real-time responsiveness over complex learning agents.
  - Validation: Run audits on false positives/negatives using your testing expertise.
  - Output: Operational agents with dashboards for easy oversight.

- Response and Recovery Integration (Respond & Recover Functions – 1-2 weeks, Low Resource)
  - Configure agents for automated triage (e.g., isolating threats) and recovery (e.g., prioritizing restores).
  - Optimize: Human-in-the-loop for critical decisions; use AI to draft incident reports. Focus on quick-recovery agents that simulate scenarios without full simulations.
  - Output: Playbooks incorporating AI agents, tested via tabletop exercises.

- Iteration and Evaluation (All Functions – Ongoing, Low Resource)
  - Monitor performance with metrics like response time reduction or false positive rates. Refine based on feedback.
  - Optimize: Schedule quarterly audits (leveraging your experience) instead of constant monitoring. Use feedback loops to scale from assisted to hybrid agents.
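The “governance matrix” output and the human-in-the-loop rule from the steps above can be sketched as a small data structure. This is a minimal illustration under my own assumptions, not something taken from NIST or from Grok’s answer: the agent names, the `Autonomy` levels, and the policy that Respond/Recover agents may not be fully autonomous are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum

class Autonomy(Enum):
    ASSISTED = 1    # agent suggests, a human acts
    HYBRID = 2      # agent acts on low-impact items, human approves the rest
    AUTONOMOUS = 3  # agent acts without approval

@dataclass(frozen=True)
class AgentEntry:
    name: str            # hypothetical agent name
    csf_function: str    # one of the NIST CSF 2.0 functions
    business_risk: str   # risk the agent is meant to reduce
    autonomy: Autonomy

CSF_FUNCTIONS = {"Govern", "Identify", "Protect", "Detect", "Respond", "Recover"}

# Illustrative entries only; a real matrix comes from the baseline assessment.
MATRIX = [
    AgentEntry("asset-inventory", "Identify", "unknown devices", Autonomy.HYBRID),
    AgentEntry("login-anomaly", "Detect", "account takeover", Autonomy.ASSISTED),
    AgentEntry("triage", "Respond", "slow incident response", Autonomy.ASSISTED),
]

def validate(matrix: list[AgentEntry]) -> list[str]:
    """Return a list of problems; an empty list means the matrix is consistent."""
    problems = []
    for entry in matrix:
        if entry.csf_function not in CSF_FUNCTIONS:
            problems.append(f"{entry.name}: unknown CSF function {entry.csf_function}")
        # Policy assumption: Respond/Recover agents must not be fully autonomous.
        if (entry.csf_function in {"Respond", "Recover"}
                and entry.autonomy is Autonomy.AUTONOMOUS):
            problems.append(f"{entry.name}: critical actions need a human in the loop")
    return problems

def needs_human_approval(entry: AgentEntry, critical: bool) -> bool:
    """Assisted agents always need approval; hybrid ones only for critical actions."""
    if entry.autonomy is Autonomy.ASSISTED:
        return True
    if entry.autonomy is Autonomy.HYBRID:
        return critical
    return False

if __name__ == "__main__":
    print(validate(MATRIX))
    print(needs_human_approval(MATRIX[0], critical=True))
```

Keeping the matrix as plain data means the quarterly audit can rerun `validate` whenever an agent is added or its autonomy level is raised.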

Total Optimized Items: Limit to 10-15 subtasks across CSF functions, prioritizing High-priority ones from NIST (e.g., risk assessment, anomaly detection). Budget estimate: $500-2,000/month for cloud tools; time: 8-12 weeks initial setup.

## Pros and Cons of Using AI Agents in Small Business Cybersecurity

| Aspect | Pros | Cons |
|---|---|---|
| Threat Detection & Response | Enhances speed by analyzing vast data for anomalies and patterns faster than humans, enabling proactive defense and reducing damage (talion.net). | Vulnerable to AI-powered attacks (e.g., adversarial inputs or polymorphic malware) that evolve to evade detection (talion.net). |
| Automation & Efficiency | Automates repetitive tasks like log analysis, patching, and monitoring, freeing limited staff for strategic work and scaling security cost-effectively (talion.net). | Over-reliance can create oversight gaps, leading to complacency or missed contextual threats; requires human validation (talion.net). |
| Predictive Capabilities | Uses analytics to forecast threats (e.g., zero-day exploits), shifting from reactive to proactive security with better resource allocation (talion.net). | Biases in training data can cause false decisions, misclassifying risks and wasting resources on false positives (cmitsolutions.com). |
| Cost & Resource Management | Reduces long-term costs by optimizing budgets (ROI in 18-24 months) and handling scaling without extra staffing (cmitsolutions.com). | High initial implementation costs for hardware, software, and training; small businesses may lack expertise, requiring outsourcing (cmitsolutions.com). |
| Ethical & Compliance | Improves accuracy in threat identification, aiding compliance with regulations (cmitsolutions.com). | Raises privacy concerns from data usage, potentially violating laws like GDPR; ethical issues around surveillance (talion.net). |
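The “requires human validation” and false-positive concerns in the table can be checked with a simple audit of an agent’s alerts. The sketch below is a minimal illustration, assuming each event has been reviewed by an analyst; the record format, the `audit_alerts` helper, and the sample counts are made up for the example.

```python
from dataclasses import dataclass

@dataclass
class AuditResult:
    precision: float            # share of alerts that were real threats
    recall: float               # share of real threats that were alerted on
    false_positive_rate: float  # share of benign events that were alerted on

def audit_alerts(records: list[tuple[bool, bool]]) -> AuditResult:
    """Each record is (agent_alerted, analyst_confirmed_threat)."""
    tp = sum(1 for alerted, threat in records if alerted and threat)
    fp = sum(1 for alerted, threat in records if alerted and not threat)
    fn = sum(1 for alerted, threat in records if not alerted and threat)
    tn = sum(1 for alerted, threat in records if not alerted and not threat)
    # Guard against division by zero on small samples.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return AuditResult(precision, recall, fpr)

if __name__ == "__main__":
    # Made-up sample: 8 true positives, 2 false positives,
    # 2 missed threats, 88 correctly ignored events.
    sample = ([(True, True)] * 8 + [(True, False)] * 2
              + [(False, True)] * 2 + [(False, False)] * 88)
    print(audit_alerts(sample))
```

Tracking these three numbers from one quarterly audit to the next gives a small business a cheap way to see whether an agent is drifting toward noise (falling precision) or toward missed threats (falling recall).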